Source(s): GPT-4 Passes the Bar Exam

Published by: Daniel Martin Katz, Michael James Bommarito, Shang Gao, and Pablo Arredondo

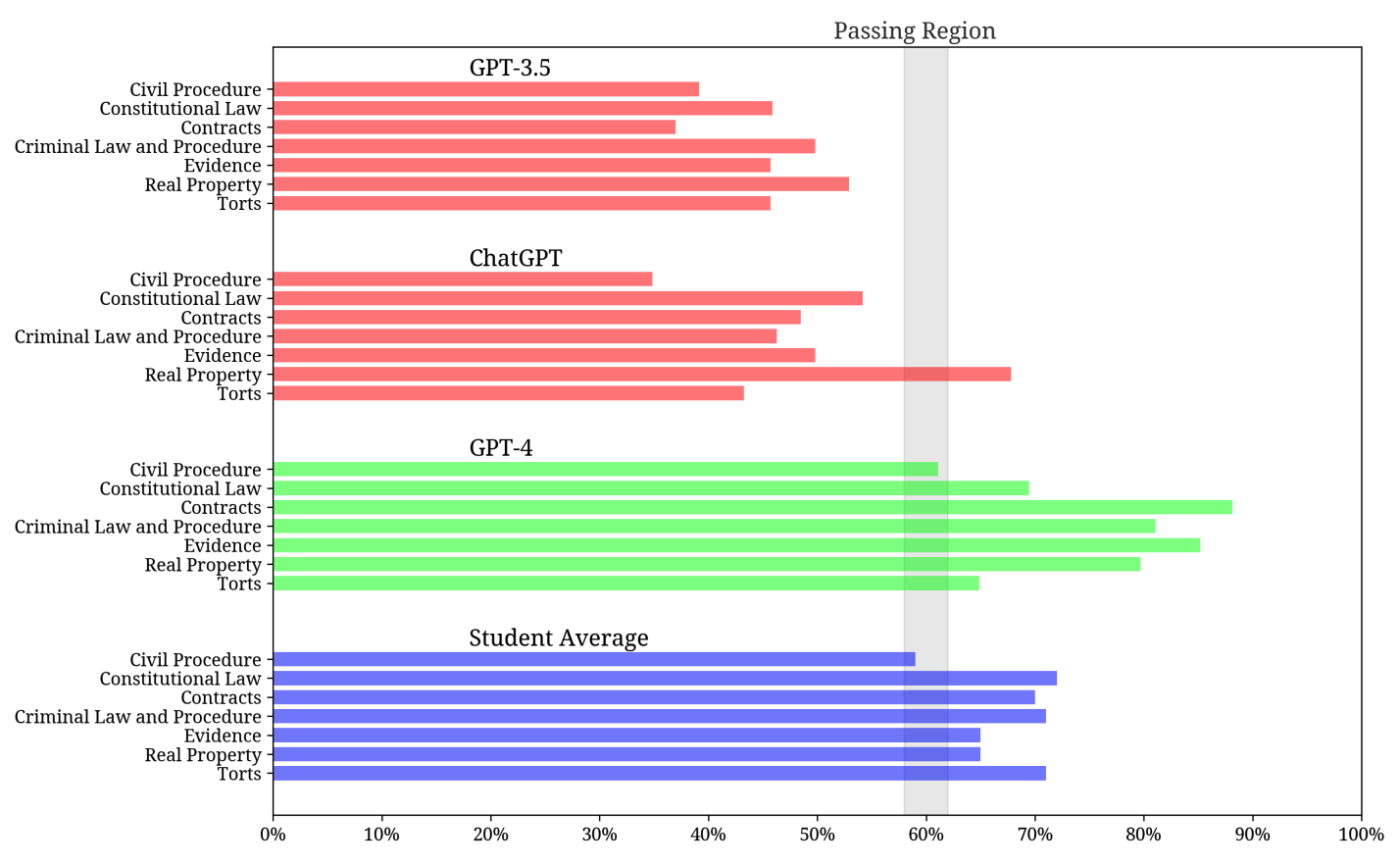

“On the MBE, GPT-4 significantly outperforms both human test-takers and prior models, demonstrating a 26% increase over ChatGPT and beating humans in five of seven subject areas. On the MEE and MPT, which have not previously been evaluated by scholars, GPT-4 scores an average of 4.2/6.0 as compared to much lower scores for ChatGPT. Graded across the UBE components, in the manner in which a human test-taker would be, GPT-4 scores approximately 297 points, significantly in excess of the passing threshold for all UBE jurisdictions.”

Casetext press release: “CoCounsel is powered by OpenAI’s GPT-4, the first AI to pass the bar”